Download Convert Xml To Csv Sql Server For Mac

The WbExport command exports the contents of the database into external files, e.g. plain text ('CSV') or XML.

The WbExport command can be used like any other SQL command (such as UPDATE or INSERT). This includes use in scripts. WbExport exports either the result of the next SQL statement (which has to produce a result set) or the content of the table(s) specified with the -sourceTable parameter. The data is written directly to the output file and not loaded into memory. The export file(s) can be compressed ('zipped') on the fly. WbImport can import the zipped (text or XML) files directly without the need to unzip them. If you want to save the data that is currently displayed in the result area into an external file, please use the 'Save data as' export dialog.

SQL Workbench/J can also export multiple tables at once. When using a SELECT-based export, you have to run both statements (WbExport and SELECT) as one script. Either select both statements in the editor and choose SQL → Execute selected, or make the two statements the only statements in the editor and choose SQL → Execute all. You can also export the result of a SELECT statement by selecting the statement in the editor and then choosing SQL → Export query result.
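As a minimal sketch of a SELECT-based export, the two statements below would be run together as one script. The table person, its columns and the output path are hypothetical placeholders; the parameters used are the ones described further below.

```sql
-- Export the result of the following SELECT as delimited text.
-- The table, the columns and the file path are made-up placeholders.
WbExport -type=text
         -file='/tmp/person.csv'
         -delimiter=','
         -header=true;
SELECT id, firstname, lastname
FROM person;
```

Both statements have to be executed in the same run, because WbExport applies to the next statement that produces a result set.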

When exporting data into a text or XML file, the content of BLOB columns is written into separate files, one file for each column of each row.

Text files that are created this way can most probably only be imported using SQL Workbench/J, as the main file will contain the filename of the BLOB data file instead of the actual BLOB data. The only other application that I know of that can handle this type of import is Oracle's SQL*Loader utility. If you run the text export together with the parameter -formatFile=oracle, a control file will be created that contains the appropriate definitions to read the BLOB data from the external file.

20.1. Memory usage and WbExport

WbExport is designed to write the rows that are retrieved from the database directly to the export file without buffering them in memory (except for the XLS and XLSX formats).

Some JDBC drivers (e.g. PostgreSQL, jTDS and the Microsoft driver) read the full result obtained from the database into memory. In that case, exporting large results might still require a lot of memory. Please refer to the SQL Workbench/J manual for details on how to configure the individual drivers if this happens to you.

Values for the -type parameter and their descriptions:

xlsm
This is the plain XML ('SpreadsheetML') format introduced with Office 2003. This format is always available and does not need any additional libraries. Files with this format should be saved with the extension xml (otherwise Office is not able to open them properly).

xls
This is the old binary format used by Excel 97 up to 2003. To export this format, only poi.jar is needed.

If the library is not available, this format will not be listed in the export dialog ('Save data as'). Files with this format should be saved with the extension xls.

xlsx
This is the 'new' XML format (OfficeOpen XML) introduced with Office 2007. To create this file format, additional libraries are required. If those libraries are not available, this format will not be listed in the export dialog ('Save data as'). Files with this format should be saved with the extension xlsx.

You can download all required POI libraries as a single archive from the SQL Workbench/J home page.

After downloading the archive, unzip it into the directory where sqlworkbench.jar is located.

Parameters and their descriptions:

-type
Possible values: text, sqlinsert, sqlupdate, sqldeleteinsert, sqlmerge, xml, ods, xlsm, xls, xlsx, html, json
Defines the type of the output file.


sqlinsert will create the necessary INSERT statements to put the data into a table. If the records may already exist in the target table but you don't want to (or cannot) delete the content of the table before running the generated script, SQL Workbench/J can create a DELETE statement for every INSERT statement. To create this kind of script, use the sqldeleteinsert type.

sqlmerge will generate statements that result in either an INSERT or an UPDATE. The exact syntax depends on the current database.

To select a syntax for a different DBMS, use the parameter -mergeType. In order for this to work properly, the table needs to have key columns defined, or you have to define the key columns manually using the -keycolumns switch.

sqlupdate will generate UPDATE statements that update all non-key columns of the table. This will only generate valid UPDATE statements if at least one key column is present. If the table does not have key columns defined, or you want to use different columns, they can be specified using the -keycolumns switch.

ods will generate a spreadsheet file in the OpenDocument format that can be opened e.g. with OpenOffice.org.

xlsm will generate a spreadsheet file in the Microsoft Excel 2003 XML format ('XML Spreadsheet'). When using Microsoft Office 2010, this export format should be saved with a file extension of .xml in order to be identified correctly.

xls will generate a spreadsheet file in the proprietary (binary) format for Microsoft Excel (97-2003). The file poi.jar is required.

xlsx will generate a spreadsheet file in the default format introduced with Microsoft Office 2007. Additional external libraries are required in order to be able to use this format. Please read the note at the beginning of this section.
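To illustrate the SQL export types, here is a hedged sketch of a sqlupdate export for a single table. The table person, the key column id and the file path are hypothetical; -keycolumns would only be needed when the table has no key defined or different columns should be used.

```sql
-- Generate UPDATE statements for every row of one table.
-- "person" and "id" are made-up names used for illustration only.
WbExport -type=sqlupdate
         -file='/tmp/person_update.sql'
         -sourceTable=person
         -keycolumns=id;
```

Switching -type to sqlinsert, sqldeleteinsert or sqlmerge would produce the other script flavours described above.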

This parameter supports auto-completion.

-file
The output file to which the exported data is written. This parameter is ignored if -outputDir is also specified.

-createDir
If this parameter is set to true, SQL Workbench/J will create any needed directories when creating the output file.

-sourceTable
Defines a list of tables to be exported. If this switch is used, -outputDir is also required unless exactly one table is specified. If one table is specified, the -file parameter is used to generate the file for the table. If more than one table is specified, the -outputDir parameter is used to define the directory where the generated files should be stored. Each file will be named after the exported table with the appropriate extension (.xml, .sql, etc.).
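A table-based export of several tables could look like the sketch below. The table names and the directory are hypothetical, and the comma-separated form of the table list is an assumption; since more than one table is listed, -outputDir is used instead of -file.

```sql
-- Export two tables, one text file per table, into a single directory.
-- "person", "address" and the directory are made-up placeholders.
WbExport -type=text
         -sourceTable=person,address
         -outputDir='/tmp/export'
         -createDir=true
         -header=true;
```

No SELECT statement follows here, because the rows are read directly from the listed tables.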

You can specify * as the table name, which will then export all tables accessible by the current user. If you want to export tables from a different user or schema, you can use a schema name combined with a wildcard.

In this case the generated output files will contain the schema name as part of the filename. When importing these files, SQL Workbench/J will try to import the tables into the schema/user specified in the filename. If you want to import them into a different user/schema, then you have to use the -schema switch for the import command. This parameter supports auto-completion.

-schema
Defines the schema in which the table(s) specified with -sourceTable are located. This parameter only accepts a single schema name. If you want to export tables from more than one schema, you need to fully qualify them as shown in the description of the -sourceTable parameter. This parameter supports auto-completion.

-types
Selects the object types to be exported.

By default only TABLEs are exported. If you want to export the content of VIEWs or SYNONYMs as well, you have to specify all types with this parameter, e.g. -sourceTable=* -types=VIEW,SYNONYM or -sourceTable=T% -types=TABLE,VIEW,SYNONYM. This parameter supports auto-completion.

-excludeTables
The tables listed in this parameter will not be exported. This can be used when all but a few tables should be exported from a schema. First all tables specified through -sourceTable will be evaluated. The tables specified by -excludeTables can include wildcards in the same way -sourceTable allows wildcards. For example, -sourceTable=* -excludeTables=TEMP* will export all tables, but not those starting with TEMP. This parameter supports auto-completion.

-sourceTablePrefix
Defines a common prefix for all tables listed with -sourceTable.

When this parameter is specified, the existence of each table is no longer tested (as is normally done), and the generated statement for exporting the table is changed to SELECT * FROM [prefix]tableName instead of listing all columns individually. This can be used when exporting views on tables, when for each table a view with a certain prefix exists (e.g. table PERSON has the view VPERSON and the view does some filtering of the data). This parameter cannot be used to select tables from a specific schema.

The prefix will be prepended to the table's name.

-outputDir
When using the -sourceTable switch with multiple tables, this parameter is mandatory and defines the directory where the generated files should be stored.

-continueOnError
When exporting more than one table, this parameter controls whether the whole export will be terminated if an error occurs during export of one of the tables.

-encoding
Defines the encoding in which the file should be written. Common encodings are ISO-8859-1, ISO-8859-15, UTF-8 (or UTF8).

To get a list of available encodings, execute WbExport with the parameter -showEncodings. This parameter is ignored for XLS, XLSX and ODS exports. This parameter supports auto-completion; if it is invoked for this parameter, it will show a list of encodings defined through the configuration property workbench.export.defaultencodings. This is a comma-separated list that can be changed.

-showEncodings
Displays the encodings supported by your Java version and operating system.

If this parameter is present, all other parameters are ignored.

-lineEnding
Possible values are: crlf, lf
Defines the line ending to be used for XML or text files. crlf puts the ASCII characters #13 and #10 after each line. This is the standard format on Windows based systems. dos and win are synonym values for crlf, unix is a synonym for lf. lf puts only the ASCII character #10 at the end of each line.

This is the standard format on Unix based systems (unix is a synonym value for this format). The default line ending used depends on the platform where SQL Workbench/J is running. This parameter supports auto-completion.

-header
Possible values: true, false
If this parameter is set to true, the header (i.e. the column names) is placed into the first line of the output file. The default is to not create a header line.

You can define the default value for this parameter in the SQL Workbench/J configuration. This parameter is valid for text and spreadsheet (OpenDocument, Excel) exports.

-compress
Selects whether the output file should be compressed and put into a ZIP archive. An archive will be created with the name of the specified output file but with the extension zip. The archive will then contain the specified file (e.g. if you specify data.txt, an archive data.zip will be created containing exactly one entry with the name data.txt). If the exported result set contains BLOBs, they will be stored in a separate archive, named datalobs.zip. When exporting multiple tables using the -sourceTable parameter, SQL Workbench/J will create one ZIP archive for each table in the specified output directory with the filename 'tablename'.zip.

For any table containing BLOB data, one additional ZIP archive is created.

-tableWhere
Defines an additional WHERE clause that is appended to all SELECT queries to retrieve the rows from the database. No validation check will be done for the syntax or the columns in the WHERE clause. If the specified condition is not valid for all exported tables, the export will fail.

-clobAsFile
Possible values: true, false
For SQL, XML and text exports this controls how the contents of CLOB fields are exported. Usually the CLOB content is put directly into the output file. When generating SQL scripts with WbExport this can be a problem, as not all DBMS can cope with long character literals (e.g. Oracle has a limit of 4000 bytes). When this parameter is set to true, SQL Workbench/J will create one file for each CLOB column value. This is the same behaviour as with BLOB columns.

Text files that are created with this parameter set to true will contain the filename of the generated output file instead of the actual column value. When importing such a file using WbImport you have to specify the -clobIsFilename=true parameter. Otherwise the filenames will be stored in the database and not the CLOB data. This parameter is not necessary when importing XML exports, as WbImport will automatically recognize the external files.
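The round trip described above can be sketched as follows. The table documents and the file path are hypothetical, and the WbImport parameters other than -clobIsFilename (-type, -file, -table, -header) are assumptions made for this illustration.

```sql
-- Export: each CLOB value is written to its own file,
-- the text file only contains the generated filenames.
WbExport -type=text
         -file='/tmp/documents.txt'
         -sourceTable=documents
         -header=true
         -clobAsFile=true;

-- Import: tell WbImport that the CLOB column holds filenames, not data.
WbImport -type=text
         -file='/tmp/documents.txt'
         -table=documents
         -header=true
         -clobIsFilename=true;
```

Without -clobIsFilename=true the import would store the filenames themselves in the CLOB column.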

SQL exports (-type=sqlinsert) generated with -clobAsFile=true can only be used with SQL Workbench/J. All CLOB files are written using the encoding specified with the -encoding switch. If the -encoding parameter is not specified, the default encoding will be used.

-lobIdCols
When exporting CLOB or BLOB columns as external files, the filename with the LOB content is generated using the row and column number for the currently exported LOB column. If you prefer to have the value of a unique column combination as part of the file name, you can specify those columns using the -lobIdCols parameter. The filename for the LOB will then be generated using the base name of the export file, the column name of the LOB column and the values of the specified columns. If you export your data into a file called userinfo and specify -lobIdCols=id and your result contains a column called img, the LOB files will be named e.g. userinfoimg344.data.

-lobsPerDirectory
When exporting CLOB or BLOB columns as external files, the generated files can be distributed over several directories to avoid an excessive number of files in a single directory.

The parameter lobsPerDirectory defines how many LOB files are written into a single directory. When the specified number of files have been written, a new directory is created. The directories are always created as a sub-directory of the target directory. The name for each directory is the base export filename plus 'lobs' plus a running number.

So if you export the data into a file 'thebigtable.txt', the LOB files will be stored in 'thebigtablelobs1', 'thebigtablelobs2', 'thebigtablelobs3' and so on. The directories will be created if needed, but if the directories already exist (e.g. because of a previous export) their contents will not be deleted!

-filenameColumn
When exporting CLOB or BLOB columns as external files, the complete filename can be taken from a column of the result set (instead of dynamically creating a new file name based on the row and column numbers). This parameter only makes sense if exactly one BLOB column of a table is exported.

-append
Possible values: true, false
Controls whether results are appended to an existing file or overwrite an existing file. This parameter is only supported for text, SQL, XLS and XLSX export types.

When used with XLS or XLSX exports, a new worksheet will be created.

-dateFormat
The date format to be used when writing date columns into the output file. This parameter is ignored for SQL exports.

-timestampFormat
The format to be used when writing datetime (or timestamp) columns into the output file. This parameter is ignored for SQL exports.

-locale
The locale (language) to be used when formatting date and timestamp values. The language will only be relevant if the date or timestamp format contains placeholders that are language dependent (e.g. the name of the month or weekday).

This parameter supports code-completion.

-blobType
Possible values: file, dbms, ansi, base64, pghex
This parameter controls how BLOB data will be put into the generated SQL statements. By default no conversion will be done, so the actual value that is written to the output file depends on the JDBC driver's implementation of the Blob interface. It is only valid for text, SQL and XML exports, although not all parameter values make sense for all export types. The type base64 is primarily intended for text exports.

The type pghex is intended to be used for export files that should be imported using PostgreSQL's COPY command. The types dbms and ansi are intended for SQL exports and generate a representation of the binary data as part of the SQL statement. dbms will use a format that is understood by the DBMS you are exporting from, while ansi will generate a standard hex-based representation of the binary data. The syntax generated by the ansi format is not understood by all DBMS! Two additional SQL literal formats are available that can be used together with PostgreSQL: pgDecode and pgEscape. pgDecode will generate a hex representation using PostgreSQL's decode() function. Using decode is a very compact format.

pgEscape will use PostgreSQL's escape syntax for binary data, and generates much bigger statements (due to the increased escaping overhead). When using file, base64 or ansi, the file can be imported using WbImport.

The parameter value file will cause SQL Workbench/J to write the contents of each BLOB column into a separate file. The SQL statement will contain the SQL Workbench/J specific extension to read the BLOB data from the file. If you are planning to run the generated SQL scripts using SQL Workbench/J, this is the recommended format. Note that SQL scripts generated with -blobType=file can only be used with SQL Workbench/J.

The parameter value ansi will generate 'binary strings' that are compatible with the ANSI definition for binary data.

MySQL and Microsoft SQL Server support these kinds of literals. The parameter value dbms will create a DBMS-specific 'binary string'. MySQL, HSQLDB, H2 and PostgreSQL are known to support literals for binary data. For other DBMS, using this option will still create an ANSI literal, but this might result in an invalid SQL statement. This parameter supports auto-completion.

-replaceExpression, -replaceWith
Using these parameters, arbitrary text can be replaced during the export. -replaceExpression defines the regular expression that is to be replaced. -replaceWith defines the replacement value. For example, -replaceExpression='(\n|\r\n)' -replaceWith=' ' will replace all newline characters with a blank. The search and replace is done on the 'raw' data retrieved from the database before the values are converted to the corresponding output format. In particular this means replacing is done before any character escaping takes place.

Because the search and replace is done before the data is converted to the output format, it can be used for all export types (text, XML, Excel, etc.). Only character columns (CHAR, VARCHAR, CLOB, LONGVARCHAR) are taken into account.

-trimCharData
Possible values: true, false
If this parameter is set to true, values from CHAR columns will be trimmed from trailing whitespace.
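A hedged sketch of the replacement feature follows, assuming the regular expression meant in the description is (\n|\r\n), i.e. a Unix or Windows line break. The table comments, its columns and the file path are hypothetical.

```sql
-- Replace every line break inside character columns with a single blank
-- before the values are written to the text file.
WbExport -type=text
         -file='/tmp/comments.txt'
         -replaceExpression='(\n|\r\n)'
         -replaceWith=' '
         -trimCharData=true;
SELECT id, comment_text
FROM comments;
```

This keeps each exported row on a single line, which tends to make the resulting text file easier to process with line-oriented tools.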

This is equivalent to the corresponding option in the connection profile.

-showProgress
Valid values: true, false, or a number
Controls the update frequency in the status bar (when running in GUI mode). The default is that every 10th row is reported. To disable the display of the progress, specify a value of 0 (zero) or the value false. true will set the progress interval to 1 (one).

Parameters and their descriptions:

-delimiter
The given string sequence will be placed between two columns. The default is a tab character (-delimiter=\t).

-rowNumberColumn
If this parameter is specified with a value, the value defines the name of an additional column that will contain the row number.

The row number will always be exported as the first column. If the text file is not created with a header (-header=false), a value must still be provided to enable the creation of the additional column.

-quoteChar
The character (or sequence of characters) to be used to enclose text (character) data if the delimiter is contained in the data. By default quoting is disabled until a quote character is defined.

To set the double quote as the quote character you have to enclose it in single quotes: -quoteChar='"'

-quoteCharEscaping
Possible values: none, escape, duplicate
Defines how quote characters that appear in the actual data are written to the output file. If no quote character has been defined with the -quoteChar switch, this option is ignored. If escape is specified, a quote character that is embedded in the exported data is written with a preceding backslash, e.g. here is a \" quote character. If duplicate is specified, a quote character that is embedded in the exported data is written as two quotes, e.g. here is a "" quote character. This parameter supports auto-completion.

-quoteAlways
Possible values: true, false
If quoting is enabled (via -quoteChar), then character data will normally only be quoted if the delimiter is found inside the actual value that is written to the output file.

If -quoteAlways=true is specified, character data will always be enclosed in the specified quote character. This parameter is ignored if no quote character is specified.

If you expect the quote character to be contained in the values, you should enable character escaping, otherwise the quote character that is part of the exported value will break the quote during import. NULL values will not be quoted even if this parameter is set to true. This is useful to distinguish between NULL values and empty strings.

-decimal
The decimal symbol to be used for numbers. The default is a dot, e.g. the number Pi would be written as 3.14159. When using -decimal=',' the number Pi would be written as 3,14159.

-maxDigits
Defines a maximum number of decimal digits. If this parameter is not specified, decimal values are exported according to the global default. Specifying a value of 0 (zero) results in exporting as many digits as available.

-fixedDigits
Defines a fixed number of decimal digits. If this parameter is not specified, decimal values are exported according to the -maxDigits parameter (or the global default).
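The text-specific options above can be combined as in the following sketch. The table products, its columns and the file path are hypothetical; the chosen delimiter and digit limit are arbitrary illustration values.

```sql
-- Pipe-delimited export with double-quote quoting.
-- Embedded quotes are doubled, numbers use a decimal comma
-- and are limited to two decimal digits.
WbExport -type=text
         -file='/tmp/products.txt'
         -delimiter='|'
         -quoteChar='"'
         -quoteCharEscaping=duplicate
         -quoteAlways=true
         -decimal=','
         -maxDigits=2;
SELECT id, name, price
FROM products;
```

Enabling -quoteCharEscaping together with -quoteAlways follows the advice above: values that contain the quote character would otherwise break the quoting during import.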

If this parameter is specified, all decimal values are exported with the defined number of digits. If -fixedDigits=4 is used, the value 1.2 is written as 1.2000. This parameter is ignored if -maxDigits is also provided.

-escapeText
This parameter controls the escaping of non-printable or non-ASCII characters.

Parameters and their descriptions:

-table
The given table name will be written into the XML output as a tag attribute.

-decimal
The decimal symbol to be used for numbers.

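The default is a dot (e.g. 3.14159).

-useCDATA
Possible values: true, false
Normally all data written into the XML file will be written with escaped XML characters (e.g. < will be written as &lt;). With -useCDATA=true, character data is enclosed in a CDATA section instead, so an HTML value would be written like this: <![CDATA[<b>This is a title</b>]]>. With -useCDATA=false (the default) an HTML value would be written like this: &lt;b&gt;This is a title&lt;/b&gt;

-xsltParameter
A list of parameters (key/value pairs) that should be passed to the XSLT processor.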

When using e.g. the wbreport2liquibase.xslt stylesheet, the value of the author attribute can be set using -xsltParameter='authorName=42'.

This parameter can be provided multiple times for multiple parameters, e.g. when using wbreport2pg.xslt: -xsltParameter='makeLowerCase=42' -xsltParameter='useJdbcTypes=true'

-stylesheet
The name of the XSLT stylesheet that should be used to transform the SQL Workbench/J specific XML file into a different format. If -stylesheet is specified, -xsltOutput has to be specified as well.

-xsltOutput
This parameter defines the output file for the XSLT transformation specified through the -stylesheet parameter.

-verboseXML
Possible values: true, false
This parameter controls the tags that are used in the XML file and minor formatting features. The default is -verboseXML=true and this will generate more readable tags and formatting.

However, the overhead imposed by this is quite high. Using -verboseXML=false uses shorter tag names (no longer than two characters) and puts more information on one line.

This output is harder to read for a human but is smaller in size, which could be important for exports with large result sets.

Parameters and their descriptions:

-title
The name to be used for the worksheet.

-infoSheet
Possible values: true, false
Default value: false
If set to true, a second worksheet will be created that contains the generating SQL of the export.
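A spreadsheet export using these two options might look like the sketch below. The table person, its columns, the worksheet title and the file path are hypothetical, and the xlsx type requires the additional libraries mentioned earlier.

```sql
-- Export a query result into an Excel workbook with a named worksheet
-- and a second sheet that records the SQL used for the export.
WbExport -type=xlsx
         -file='/tmp/person.xlsx'
         -header=true
         -title='Person list'
         -infoSheet=true;
SELECT id, firstname, lastname
FROM person;
```

The info sheet can help when a workbook is passed on to someone else, because the generating query travels with the data.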


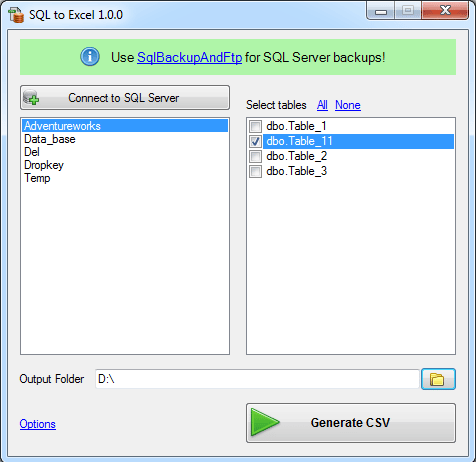

MSSQL to CSV

Latest version 1.3 released

MSSQL-to-CSV is a free program to convert MS SQL databases into comma separated values (CSV) files. The program has high performance due to direct connection to source databases and writing into .csv files (it does not use ODBC or any other middleware software).

Command line support allows you to script, automate and schedule the conversion process.

Features

All versions of Microsoft SQL Server are supported (including Azure SQL).
Option to convert individual tables.
Option to select separator: tab, comma or semicolon.
Option to store conversion settings into a profile.
Easy-to-use wizard-style interface.
Full install/uninstall support.
Unlimited 24/7 support service.
Freeware!

Requirements

Necessary privileges to read the source database on the MS SQL server.